Claude Code 2.0 Is Promising But Flawed

Sonnet 4.5 arrives to great acclaim, but Claude Code’s new checkpoint rewinds and usage monitoring seem half-baked compared to third-party alternatives. Also, my dog does a kickflip with Sora 2.

Two weeks ago, Anthropic looked like it was stumbling. Developers were bailing on Claude Code, OpenAI had just shipped GPT-5 Codex, and Sonnet itself was rumored to be degrading. But in the roller-coaster world of AI coding agents, scripts flip fast and Anthropic is once again flexing on the competition with the release of Claude Sonnet 4.5, beating Codex in head-to-head tests and winning over both veteran engineers and hype bros across the internet.

But while the underlying model feels stronger than ever, Anthropic’s simultaneous release of Claude Code v2 is more mixed. It’s great to see progress on critical quality-of-life features like checkpoints and usage monitoring, but both of these new features seem half-baked, especially next to third-party options like Git and Claude Monitor.

In this edition of AI Engineering Report, I will cover:

Online sentiment towards Sonnet 4.5, including one YouTuber’s fascinating breakdown comparing it directly to GPT-5-Codex on a web dev task

Why I’m disappointed with the new /rewind feature in Claude Code v2

Why I’m disappointed with the new /usage feature in Claude Code v2

Using OpenAI’s new Sora 2 app to pretend my dog can do a kickflip

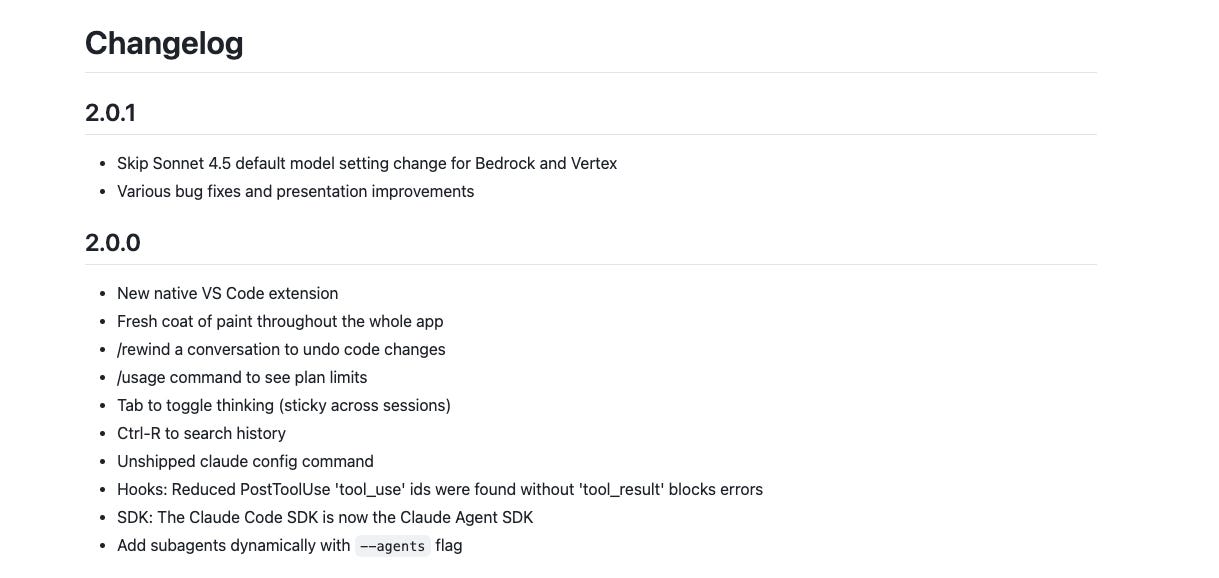

Claude Code 2.0.0 Release - What’s New

If you’re curious about exactly what Claude Code v2 contains, you can find it in the CHANGELOG.md in their GitHub repo. Claude Code is not open source, but the GitHub repo is used for issue tracking, changelogs, and some small examples. Here are the v2 release notes:

There are a few neat things in here, including tab-to-think, which is a way to force the model to think harder. Sonnet now outperforms Opus on most tasks, raising the question of whether there’s even a point to Opus. Like GPT-5, it can either respond quickly or think harder for a more considered response. The straightforward way to get it to think harder is to use the words ‘think harder’ or ‘ultrathink’ in your prompt, but you can now also press Tab to turn on a mode that always does it.

Ctrl-R to search history is a nice quality-of-life feature mirroring bash’s reverse history search, although honestly, I rarely find myself wanting to use it.

The two features that stood out to me are /rewind and /usage, both of which I found disappointing for reasons I’ll get into.

But first, let’s talk about the Sonnet 4.5 hype.

Sonnet Puts Codex Back In Its Place

Despite weeks of speculation that Sonnet was deteriorating as a model, the new release of Sonnet 4.5 completely upended the sentiment. Simon Willison, one of the top 5 bloggers ever on Hacker News, wrote:

My initial impressions were that it felt like a better model for code than GPT-5-Codex, which has been my preferred coding model since it launched a few weeks ago.

Simon wasn’t alone. YouTuber Cole Medin (167k subscribers) set out to compare Sonnet 4.5 directly to Codex in his video Claude Sonnet 4.5 - The New Coding King? (Sonnet 4.5 vs. GPT 5 Codex), using a web dev task he designed: adding a Stripe integration to an existing web app.

On Sonnet 4.5, he had the following to say:

There we go, Claude Code with Sonnet 4.5 has finished the implementation and did it in 15 minutes, the entire Stripe integration, it’s very impressive. I actually did this exact same build with Opus 4.1 in the past and it took 35 minutes to build the whole thing, so more than 2.5 faster with 4.5... it made a couple mistakes, so it wasn’t quite a one-shot but it was pretty close.

Meanwhile, he ran the same test with GPT-5-Codex:

It took 1 hour and 20 minutes in total while Sonnet 4.5 took 15 minutes, so the speed...was pretty disappointing. It seemed to do a lot of weird things like after editing a file, it would re-read the files and see what changes it made.

While he had minor issues with both implementations, he found Claude Code’s to be slightly closer to his desired output.

Overall, Cole put Claude Code ahead of Codex on both speed and quality.

As an aside, Cole’s benchmarking strategy struck me as a really cool way to compare these two agents: a Claude Code custom command (/execute-prp) that takes a product requirements doc as its input.

Claude Code /rewind Command: Useful But Inferior To Git

In the Claude Code 2.0 announcement post, Anthropic describes the /rewind command this way:

Complex development often involves exploration and iteration. Our new checkpoint system automatically saves your code state before each change, and you can instantly rewind to previous versions by tapping Esc twice or using the /rewind command. Checkpoints let you pursue more ambitious and wide-scale tasks knowing you can always return to a prior code state.

When you rewind to a checkpoint, you can choose to restore the code, the conversation, or both to the prior state. Checkpoints apply to Claude’s edits and not user edits or bash commands, and we recommend using them in combination with version control.

I tested this command out by doing some work, then typing `/rewind`.

Upon typing the command, you get a list of all your previous messages, which are checkpointed. You can return to those checkpoints, and either revert code, revert the conversation, or both - undoing all code and/or conversation changes since the checkpoint.

If it does what it advertises, why am I disappointed? Because Git is still far better at solving the stated problem. Anthropic pitches checkpoints as a way to manage exploration and iteration on long-running tasks, but the checkpoint feature is far too rudimentary to accomplish this.

When you revert the code, it destroys all the code changes made since the checkpoint. That’s what you asked for, but losing written code can still catch you off guard later. With Git, you could instead commit all the changes you want to discard to a branch, then move off the branch. That way, the code is gone from your working copy, but in an emergency where you deleted something you actually needed, it’s still right there in a branch.
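To make the Git alternative concrete, here is a minimal Python sketch of the "park it on a branch" workflow, driving the `git` CLI through `subprocess`. It assumes Git 2.23 or later (for `git switch`), that you are on a named branch with uncommitted changes, and a hypothetical `backup/` branch-naming convention; the helper names are illustrative, not part of any existing tool.

```python
import subprocess
from datetime import datetime, timezone


def park_changes_plan(current_branch: str, backup_branch: str) -> list[list[str]]:
    """Return the git commands that move uncommitted work onto a backup branch.

    After they run, the working tree matches `current_branch` again, but the
    discarded edits remain recoverable from `backup_branch`.
    """
    return [
        ["git", "switch", "-c", backup_branch],  # new branch carries the uncommitted edits
        ["git", "add", "-A"],                     # stage everything, including new files
        ["git", "commit", "-m", f"Parked changes from {current_branch}"],
        ["git", "switch", current_branch],        # back to a clean copy of the original branch
    ]


def park_changes(repo: str = ".") -> str:
    """Run the plan in `repo` and return the name of the backup branch."""
    current = subprocess.run(
        ["git", "rev-parse", "--abbrev-ref", "HEAD"],
        cwd=repo, check=True, capture_output=True, text=True,
    ).stdout.strip()
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    backup = f"backup/{current}-{stamp}"  # hypothetical naming convention
    for cmd in park_changes_plan(current, backup):
        subprocess.run(cmd, cwd=repo, check=True)  # stop at the first failing step
    return backup
```

To recover something later, `git switch` to the backup branch or `git cherry-pick` its single commit. Building the plan as plain argument lists keeps the destructive steps easy to inspect before running them.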

And since you need Git anyway, why not use it for everything? In particular, I find GitHub Desktop an intuitive, visual tool for managing Git branches on your local machine, and light-years ahead of Claude Code’s new checkpoint feature.

In fact, a Reddit user even built a tool that auto-commits after every Claude edit, essentially giving you checkpoints, but powered by Git’s full ecosystem that’s evolved over two decades of production use.
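The Reddit tool’s code isn’t covered here, so the following is not its implementation, only a minimal sketch of the same idea built on Claude Code’s hooks feature. It assumes a `PostToolUse` hook that runs a command after file-editing tools and pipes a JSON payload to stdin containing `tool_name`, `tool_input.file_path`, and `cwd`. Those field names, the tool names, the settings snippet in the docstring, and the `--hook` flag are all assumptions to verify against the current hooks documentation.

```python
"""Auto-commit after each Claude edit (sketch).

Assumed registration in .claude/settings.json (verify against the hooks docs):
  {"hooks": {"PostToolUse": [{"matcher": "Edit|Write|MultiEdit",
      "hooks": [{"type": "command", "command": "python3 autocommit.py --hook"}]}]}}
"""
import json
import subprocess
import sys
from typing import Optional

EDIT_TOOLS = {"Edit", "Write", "MultiEdit"}  # assumed names of the file-editing tools


def commit_message(payload: dict) -> Optional[str]:
    """Build a commit message from a hook payload, or None if no commit is needed."""
    if payload.get("tool_name") not in EDIT_TOOLS:
        return None
    path = payload.get("tool_input", {}).get("file_path", "unknown file")
    return f"claude checkpoint: {payload['tool_name']} {path}"


def autocommit(payload: dict, repo: str = ".") -> bool:
    """Stage all changes and commit them; return True if a commit was made."""
    message = commit_message(payload)
    if message is None:
        return False
    subprocess.run(["git", "add", "-A"], cwd=repo, check=True)
    # `git diff --cached --quiet` exits 0 when nothing is staged, so skip empty commits.
    if subprocess.run(["git", "diff", "--cached", "--quiet"], cwd=repo).returncode == 0:
        return False
    subprocess.run(["git", "commit", "-q", "-m", message], cwd=repo, check=True)
    return True


# Only act when invoked as a hook, so importing or test-running the file is harmless.
if __name__ == "__main__" and "--hook" in sys.argv[1:]:
    hook_payload = json.load(sys.stdin)
    autocommit(hook_payload, repo=hook_payload.get("cwd", "."))
```

Each edit then becomes one commit, so the usual Git tools (log, diff, revert, or a squash at the end) take over the job that /rewind’s checkpoints do.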

To me, there’s one great use case for the /rewind command: cleaning up the conversation to avoid context rot. You can manage the code in Git, but you cannot manage the conversation in Git. Still, I increasingly suspect it’s better to save progress more frequently into files like CLAUDE.md / AGENTS.md as a way of organizing work, and to start fresh sessions from those markdown notes, than to try to repair a session that went off the rails.

Overall, it’s nice to see the Claude Code team make some progress towards managing complex work all within one session and one tool, but it’s simply not there yet.

Claude Code /usage Command: Objectively Worse Than Claude-Monitor

Last week, I wrote a post highlighting that Claude Code saves all your sessions’ token usage and cost in local JSONL files under ‘~/.claude/projects’ and simply doesn’t show it to you. Even though the data sits right on your local machine, you need a third-party tool called Claude Monitor to view it. So when I saw that Claude Code v2 shipped a new /usage command, I assumed they had incorporated the essentials of claude-monitor into Claude Code.
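Reading that data really is easy. As a minimal sketch, the Python below totals token counts across every session log. It assumes each JSONL line is a JSON object and that entries with usage data carry a `message.usage` object with Anthropic API-style fields (`input_tokens`, `output_tokens`, `cache_creation_input_tokens`, `cache_read_input_tokens`). The log format is undocumented and may change, and the sketch does not de-duplicate entries that might appear in more than one log, so treat its totals as approximate.

```python
import json
from collections import Counter
from pathlib import Path

# Assumed usage fields, mirroring the Anthropic Messages API `usage` object.
USAGE_FIELDS = (
    "input_tokens",
    "output_tokens",
    "cache_creation_input_tokens",
    "cache_read_input_tokens",
)


def usage_from_lines(lines) -> Counter:
    """Sum the usage fields found in an iterable of JSONL lines."""
    totals: Counter = Counter()
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip blank, partially written, or malformed lines
        if not isinstance(entry, dict):
            continue
        message = entry.get("message")
        usage = message.get("usage") if isinstance(message, dict) else None
        if not isinstance(usage, dict):
            continue  # e.g. user turns, which carry no usage data
        for field in USAGE_FIELDS:
            totals[field] += usage.get(field) or 0
    return totals


def total_usage(projects_dir: Path = Path.home() / ".claude" / "projects") -> Counter:
    """Sum token usage across every session log under `projects_dir`."""
    totals: Counter = Counter()
    for log in sorted(projects_dir.rglob("*.jsonl")):
        with log.open(encoding="utf-8") as fh:
            totals.update(usage_from_lines(fh))
    return totals
```

Calling `total_usage()` with no arguments reads the default directory; splitting the line parser from the file walk keeps the parsing logic testable on its own.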

To my surprise, the /usage command is even more crippled than /rewind. It only shows you the percent of usage left in the current session and week.

It doesn’t show the number of tokens you used, only the percentage.

It doesn’t show what the cost would be if you were using the API instead of a monthly plan.

It doesn’t differentiate between token usage and message usage, even though the quotas limit both.

It doesn’t let you switch between a session view, daily view, and monthly view.
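Per-day views and message counts are likewise a few lines once the log entries are parsed into dictionaries. This minimal sketch groups entries by the calendar date of their timestamp and counts assistant messages alongside input and output tokens. It assumes each entry has an ISO 8601 `timestamp` field and a `message.usage` object, as in the Claude Code session logs; that record shape is an assumption, not a documented format.

```python
from collections import defaultdict
from datetime import datetime


def daily_breakdown(records: list[dict]) -> dict[str, dict[str, int]]:
    """Group usage records by day: assistant message count plus token totals."""
    days: dict[str, dict[str, int]] = defaultdict(
        lambda: {"messages": 0, "input": 0, "output": 0}
    )
    for rec in records:
        message = rec.get("message")
        usage = message.get("usage") if isinstance(message, dict) else None
        stamp = rec.get("timestamp")
        if not isinstance(usage, dict) or not stamp:
            continue  # only entries with usage data count toward the totals
        # fromisoformat() rejects a trailing "Z" before Python 3.11, so normalise it.
        day = datetime.fromisoformat(stamp.replace("Z", "+00:00")).date().isoformat()
        bucket = days[day]
        bucket["messages"] += 1
        bucket["input"] += usage.get("input_tokens", 0)
        bucket["output"] += usage.get("output_tokens", 0)
    return dict(sorted(days.items()))  # chronological, since keys are YYYY-MM-DD
```

Swapping `.date().isoformat()` for a `YYYY-MM` string would give the monthly view; a session view would group by a session identifier instead, if the logs provide one.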

Meanwhile, claude-monitor does all of these things.

Furthermore, if you are on a monthly plan, the /cost command still simply tells you that there’s “no need to monitor cost”, hiding the fact that you might save money by paying usage-based API pricing instead. Once again, I have to speculate that Anthropic doesn’t want you to know you could save money, and is banking on people preferring the predictability of fixed pricing over taking the time to realize they’re overpaying.
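The comparison /cost leaves out is simple arithmetic: price each token category at its per-million-token API rate, sum, and compare the total with the flat plan fee. In this sketch the default rates are assumed Sonnet list prices ($3 input, $15 output, $3.75 cache write, $0.30 cache read per million tokens) that should be checked against Anthropic’s current pricing page, and the usage figures below are hypothetical.

```python
# Assumed Sonnet API list prices in USD per million tokens; verify before relying on them.
DEFAULT_PRICES_PER_MTOK = {
    "input_tokens": 3.00,
    "output_tokens": 15.00,
    "cache_creation_input_tokens": 3.75,
    "cache_read_input_tokens": 0.30,
}


def api_equivalent_cost(usage: dict[str, int], prices: dict[str, float] = DEFAULT_PRICES_PER_MTOK) -> float:
    """Dollar cost the given token usage would have incurred on pay-as-you-go API pricing."""
    return sum(usage.get(kind, 0) * price / 1_000_000 for kind, price in prices.items())


def cheaper_option(monthly_usage: dict[str, int], plan_price: float) -> str:
    """Compare a month of usage priced via the API against a flat subscription fee."""
    api_cost = api_equivalent_cost(monthly_usage)
    if api_cost < plan_price:
        return f"API (${api_cost:.2f} vs ${plan_price:.2f} plan)"
    return f"plan (${plan_price:.2f} vs ${api_cost:.2f} via API)"
```

For a hypothetical month of 2M input, 0.5M output, 1M cache-write, and 20M cache-read tokens, `api_equivalent_cost` comes to $23.25: cheaper than a $100 plan, but more than a $20 one. Plan quotas and API rate limits also differ, so this is only a first-order comparison.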

In short, claude-monitor is still a mandatory tool if you care about optimizing cost and token usage at all.

Using Sora 2 To Watch My Dog Do A Kickflip

This newsletter is targeted towards engineers and will primarily cover engineering content, but I’m also closely following AI media and arts trends, including as a participant in the excellent Machine Cinema AI art meetup group. Through their WhatsApp group, I got an invite to OpenAI’s just-announced Sora 2, and I have to say, it’s a lot of fun. The UI is clearly heavily inspired by TikTok, except that instead of posting and editing videos with a traditional editor, you only prompt videos and edit them with follow-up prompts.

For now, I’ve mostly focused on pretending my dog Lucky could skateboard.

That one actually looked somewhat believable, since he doesn’t land the kickflip and some dogs can actually skateboard a little. But I did push my luck and have him get some hang time off the half-pipe:

Thanks for reading AI Engineering Report! As always, please leave a comment on Substack or reply to this email with any thoughts.
