OpenAI DevDay: Sora 2 Stole the Show

Sora 2 is now available via API, why OpenAI gives direct competitors the mic on Main Stage, and how I feel they stack up against Anthropic after DevDay 2025

Yesterday, I had the opportunity to attend OpenAI DevDay in San Francisco. Since this is such a big event, almost everyone writing about AI covered it, so rather than rehash the big announcements, I want to offer my personal impressions.

Let’s quickly recap the biggest announcements that I’ll discuss in this newsletter:

Sora 2 gets an API, meaning you can now build apps around generative video at surprisingly affordable prices

OpenAI launched the Apps SDK, meaning you’ll be able to use MCP to build apps that are accessible directly within ChatGPT, hinting at a new ‘App Store’ moment

OpenAI launched AgentKit, a toolkit for building complex agent orchestrations, with a special focus on a visual drag-and-drop UI agent builder and an SDK for adding ChatGPT-like experiences in your app

Unrelated to DevDay, there’s also a big promo offering 40M free tokens for the #1 agent on TerminalBench, which I cover at the end!

Takeaway #1: Sora 2 Stole The Show

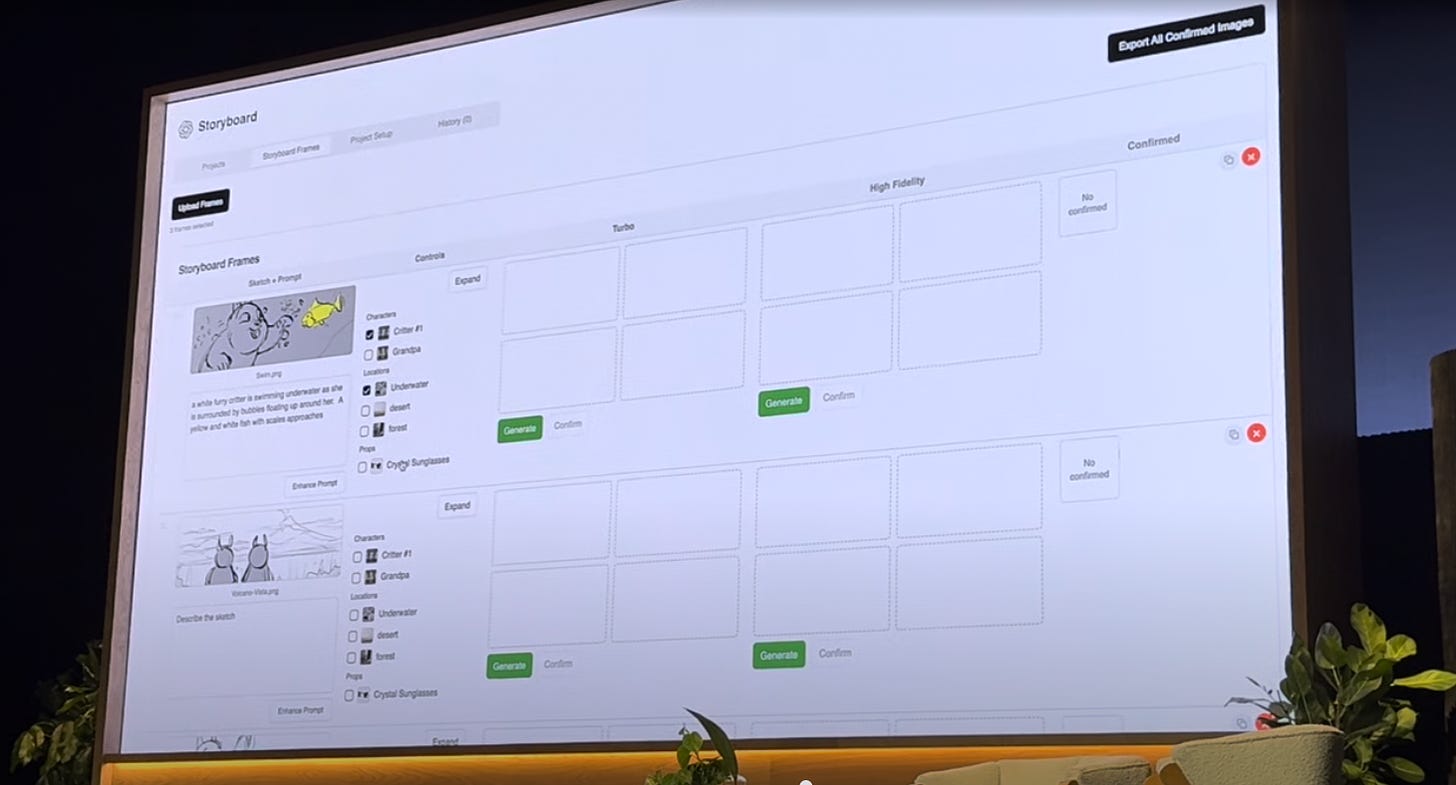

While I was primarily looking forward to the developer talks, it was a talk for creatives that blew my mind the most - Sora, ImageGen, and Codex: The Next Wave of Creative Production. They showed off a new “Storyboarding” tool that lets you organize characters, settings, and related prompts so that they stay consistent across a whole story.

As someone who’s attended many of Machine Cinema’s “GenJam” meetups, where we do things like make music videos with AI, I’ve seen AI-generated videos struggle badly with consistency. Even when individual clips looked great, the inconsistency between clips is what made longer videos easily identifiable as “AI slop”. This storyboard tool looks to solve that problem.

I loved that they linked traditional human creativity to AI-related creativity by having a human draw a real novel character on an iPad, then loading that character into the tool and generating a Pixar-style short animated film with that character as the lead.

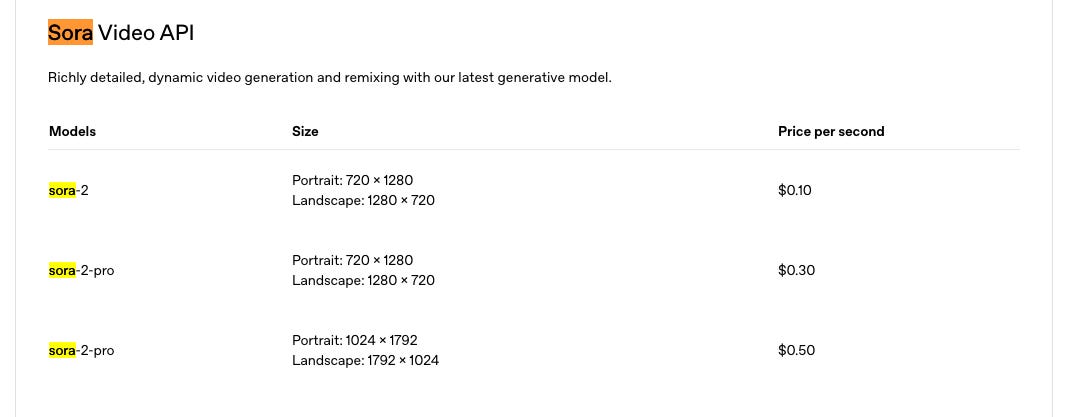

But there was great Sora 2 news for developers after all: they also announced pricing for the Sora 2 API, at $0.10 per second of video for the regular model, $0.30 per second for the pro model, and $0.50 per second for the pro model at the larger resolution.

While not exactly cheap in the general sense, this is fairly cheap by AI video generation standards, which is significantly more expensive than text or code generation.
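To make those numbers concrete, here is a rough back-of-the-envelope sketch in Python. The tier labels and the `estimate_cost` helper are my own, purely for illustration, and are not part of OpenAI's API. It also assumes billing is simply the per-second price times clip length, and that every retry ("take") is billed in full.

```python
# Per-second prices (USD) as quoted in this post.
# The tier labels are hypothetical names chosen for this sketch.
PRICE_PER_SECOND = {
    "regular": 0.10,   # regular model
    "pro": 0.30,       # pro model
    "high_res": 0.50,  # the larger-resolution tier
}


def estimate_cost(seconds: float, tier: str = "regular", takes: int = 1) -> float:
    """Estimate the USD cost of generating `takes` clips of `seconds` each.

    Assumes cost = price per second * seconds * takes (an assumption,
    not a statement of how billing actually works).
    """
    if seconds < 0 or takes < 0:
        raise ValueError("seconds and takes must be non-negative")
    if tier not in PRICE_PER_SECOND:
        raise ValueError(f"unknown tier: {tier!r}")
    return round(PRICE_PER_SECOND[tier] * seconds * takes, 2)


if __name__ == "__main__":
    # A 30-second ad spot, one take per tier.
    for tier in PRICE_PER_SECOND:
        print(f"{tier:>8}: ${estimate_cost(30, tier):.2f}")
```

Under those assumptions, a single 30-second clip works out to $3 on the regular model, $9 on pro, and $15 at the larger resolution. The real budget driver is likely to be the number of takes you need before a clip is usable.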

One use case they showed off was a toy company using Sora to make proofs of concept for new toy and game designs. While that’s interesting, given that these models are expensive, I think the use cases we’ll see emerge the most will relate to advertising, as video ads are both hard to make and one of the most monetizable applications of AI video generation.

Takeaway #2: OpenAI Competes Against Yet Simultaneously Promotes Their Startup Ecosystem

One of the biggest announcements was Agent Builder, a visual toolkit for building agents. At first, I thought this was strictly a no-code tool, but an OpenAI employee I spoke with later told me that it’s actually just a visualization of an underlying SDK and you can still mix and match coding with the drag & drop UI.

Many noted this might put huge competitive pressure on startups in the visual agent space, notably n8n, Zapier, and YC-backed Gumloop. Any startup in the AI space has to consider that if their market is even remotely big, they will be competing directly with the AI behemoths they are building on top of. It’s honestly unclear to me whether startups can differentiate in the space, or if “nobody got fired for buying from OpenAI” will demolish them.

But the silver lining for those startups is that OpenAI does not seem interested in sucking the air out of the room. In fact, very much the opposite: OpenAI gave speaking slots to many direct competitors to Codex, including a highlighted main stage talk given to Cursor and a lightning round talk given to Warp. It was fascinating to me that Sam Altman would make Codex moving from Research Preview to GA a central part of his keynote address, and then let a direct competitor on stage immediately after.

To me, the takeaway is that OpenAI just wants to grow the overall AI ecosystem, and they’re more than happy to compete with startups and let the best products win. It was inevitable that they would want to build their own coding agents, but it’s also wise that they’re willing to force their internal teams to compete against external ones and let the best agent win.

It seems Sam Altman is avoiding many of the pitfalls that Microsoft ran into in the past while trying to build a walled garden on Windows. There was even a callback to Microsoft’s heyday on the main stage, when they played a remix of Steve Ballmer’s classic “Developers, Developers, Developers” meme, generated by Sora 2 with Sam Altman in Ballmer’s place.

Takeaway #3: OpenAI Feels A Step Behind Anthropic on AI Coding But A Leap Ahead of Anthropic on Everything Else AI

Despite Codex moving to GA being a big announcement, everything about coding agents felt underwhelming. They demonstrated several MCP use cases that felt like something that might have impressed me 6 months ago, but in the AI coding world, that might as well be a decade ago. Nowadays, using MCP to connect to an external device is a big ‘meh’, and lest we forget, it’s OpenAI adopting a standard that Anthropic created.

Moreover, while both Twitter and Reddit seem genuinely divided on whether Codex or Claude Code is better, I’m personally still a bigger fan of Claude Code, particularly since Sonnet 4.5 came out. I wrote about this in the last edition of the newsletter, and my preference is almost entirely because it’s faster, which makes it more fun for me. Nobody disputes that Sonnet is faster - even the OpenAI employees I spoke with. The only argument is whether Codex is better on larger, more complex tasks, but personally, I’d usually rather ‘pair’ on larger tasks interactively than let the agent do the entire thing itself.

But meanwhile, OpenAI feels significantly ahead of Anthropic on everything except coding. The biggest announcement here was the Apps SDK, which lets people build apps within ChatGPT. Although whether this will be realistic to monetize is still up in the air, it will certainly be an exciting moment for the AI consumer market.

Their AgentKit is clearly meant to move into the market of the ‘semi-technical’ agent builders, expanding OpenAI’s ambitions beyond pure devs.

And of course, Sora 2 is both one of the hottest consumer apps on the planet and a leading option for the creative industry to adopt AI into their workflows.

Given the conference was titled Developer Day, I thought it’d be more focused on coding. But of course, as developers, we don’t just want to code faster; we also want to build awesome stuff for end-users, and almost all of these announcements enable that.

The Apps SDK might trigger a new “App Store” gold rush (I’m very skeptical it will be easy to monetize, but it’s possible and great to be early)

AgentKit might bridge the gap between technical and non-technical teams for building advanced agent use cases

Sora 2 will open up a whole new class of application categories enabled by AI video

ICYMI: Free Tokens for #1 Agent On TerminalBench

Something unrelated to DevDay, but worth noting, in case you like free stuff - Factory shared a tweet offering 40M free tokens to use their agent Droid with Sonnet 4.5. Factory’s Droid agent is actually the #1 ranked agent according to TerminalBench, so this is a lot of free value if you want to compare their product to Claude Code or Codex.

Thanks for reading AI Engineering Report. Reply to this email or leave a comment on Substack with any thoughts.
